Since 2015, Prem Natarajan had been watching the emergence of Alexa from the outside, with growing interest.

In June 2018, he joined Amazon and began work on the Hindi project, says Bibhu Ranjan Mishra.

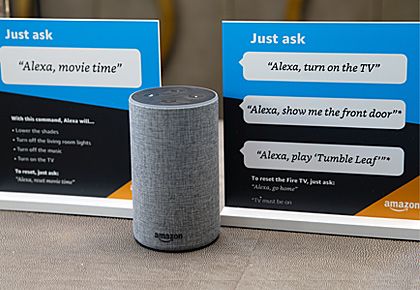

Ask Alexa what makes her so intelligent that she can learn languages in no time, and she may start waffling about the technologies that are vital for a conversational chatbot: artificial intelligence, machine learning, deep learning, natural language processing, big data and so on.

What she probably won't tell you is that special learning methods are making her relentlessly smarter and more capable with each passing day and that a whole team works behind the scenes to develop those methods.

These efforts resulted in Amazon announcing last month that Alexa can now speak and understand Hindi.

Business Standard caught up with the man who has been driving the Hindi project -- Chennai-born Prem Natarajan -- when he was on a brief visit to Amazon India headquarters in Bengaluru.

He is based in Los Angeles where he works as vice-president, Alexa AI and head of Natural Language Understanding at Amazon.

After a PhD in machine learning from Tufts University in Boston, he spent over two decades in the US working with R&D-centric companies and on studies in optical character and handwriting recognition, speech recognition and natural language processing.

Since 2015, Natarajan had been watching the emergence of Alexa from the outside, with growing interest.

In June 2018, he joined Amazon and began work on the Hindi project.

A new R&D team of scientists, data linguists, and language engineers was set up at the Bengaluru office under his supervision.

The task was complex.

"When we looked at Hindi, there were multiple challenges.

"The first thing is the unavailability of data.

"Then, unlike most other global languages, neither the spelling nor the pronunciation is standardised in Hindi," said Natarajan.

Take the word for love -- pyaar.

While it is spelt as pyar in the movie Dil Vil Pyar Vyar, it is spelt differently in Pyaar Ka Punchnama.

It means that when someone does a search, it restricts the ability of the search engine to connect with the content because there isn't any standardised representation.

Similarly, in the Hindi script, Devanagari, the same word can be spelt differently in different places, leading to a huge variability.

For example, while in some places the word for river is spelt dariya, in other places it is spelt daria.

Then comes the issue of local dialects and accent variation, which make things even more fiendishly difficult.

All this meant his team had to set up a whole new processing workflow for handling the colloquial Latin-script spellings of Hindi words and mapping them to standardised forms.
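To make the idea concrete, here is a minimal Python sketch of that kind of spelling standardisation. The function name `normalise_romanised` and its two rules are illustrative assumptions, not the workflow Amazon built; a real system would need far richer rules or a learned model.

```python
import re


def normalise_romanised(word: str) -> str:
    """Map a Latin-script Hindi spelling to a rough canonical key.

    Hypothetical, illustrative rules only; not Amazon's actual workflow.
    """
    key = word.lower()
    # Collapse any run of a repeated letter: "pyaar" -> "pyar".
    key = re.sub(r"(.)\1+", r"\1", key)
    # Treat "iy" before a vowel like a plain "i": "dariya" -> "daria".
    key = re.sub(r"iy(?=[aeiou])", "i", key)
    return key


if __name__ == "__main__":
    for spelling in ["pyar", "Pyaar", "dariya", "daria"]:
        print(spelling, "->", normalise_romanised(spelling))
```

With a shared key, a search for either spelling can reach content indexed under the other.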

Typically, for all other languages, including Indian English, Amazon used what is called concatenative text-to-speech (TTS) for the first launch; this means recorded speech is broken down into small segments, which are then concatenated, or stitched together, to form new utterances.

But with Hindi, the Alexa team decided to use 'neural TTS', which relies on more sophisticated machine learning models.

The hallmark of neural TTS is that it directly generates speech from the input text, making it sound far more natural.

Another headache for Natarajan's team was the growing list of new words whenever a new Hindi film was released.

If someone asks Alexa to play songs from the Bollywood film Piku, the word 'Piku' will be a new word for the computer.

If the technology does not address that, it can lead to a poor customer experience.

Tricky new problems called for new solutions.

Natarajan and his team had to invent some new techniques, including phonetic rather than word-based searches, to do the matching.

"Of course, when you use phonemes and not words, your matches will be less precise as it may throw up results which may be unrelated.

"That means you have to solve the problem of how to improve precision," said Natarajan.
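The trade-off Natarajan describes can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the crude `phonetic_key` (real systems work on phonemes, not letters), the made-up catalogue, and the use of `difflib` string similarity as a second pass to win back precision.

```python
from difflib import SequenceMatcher

# Hypothetical letter classes that sound alike; a stand-in for real phonemes.
_SOUND_ALIKE = str.maketrans({"c": "k", "q": "k", "z": "s", "w": "v"})


def phonetic_key(word: str) -> str:
    """Crude phonetic key: merge sound-alike consonants, drop vowels, 'y' and 'h'."""
    key = []
    for ch in word.lower().translate(_SOUND_ALIKE):
        if ch in "aeiouyh":
            continue
        if key and key[-1] == ch:
            continue  # collapse repeated consonants
        key.append(ch)
    return "".join(key)


def phonetic_search(query: str, catalogue: list[str], min_similarity: float = 0.5) -> list[str]:
    """Match on the phonetic key first, then re-rank by spelling similarity."""
    key = phonetic_key(query)
    candidates = [title for title in catalogue if phonetic_key(title) == key]
    scored = [
        (SequenceMatcher(None, query.lower(), title.lower()).ratio(), title)
        for title in candidates
    ]
    return [title for score, title in sorted(scored, reverse=True) if score >= min_similarity]


if __name__ == "__main__":
    catalogue = ["Piku", "Pack", "Paa", "Pink"]
    print(phonetic_search("peeku", catalogue))
```

In this toy catalogue, 'Piku' and the unrelated 'Pack' share the same phonetic key, which is exactly the loss of precision mentioned in the quote; the similarity threshold in the second pass is one simple way to filter such matches out.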

It also meant that the machine's learning had to be automated, as it is not humanly possible to address these issues by hand given the scale of the challenge.

Natarajan's team used deep learning to come up with new learning methodologies, such as transfer learning, active learning and self-learning, to speed up the learning process for Alexa.

Machine learning typically is dependent on input data, speech signals, and transcriptions.

The algorithm learns from it and creates a model.

Then the inference engine takes that model and the new signal and produces the answer.

In this setup, there is no way to feed in what a previously trained model has already learned.

"But with deep learning, it turned out that we can actually effectively leverage transfer learning in two ways.

"First, by reusing resources from one language in another.

"Second, by transferring the learning from domains where there is enough data (e.g. restaurants, movies etc.) to domains that don't have enough (recipes etc.)," he said.

In active learning, one does not need vast amounts of training data to gain a certain level of performance.

"We have now shown that in some cases, we are able to reduce the amount of training data we need by a factor of 40," said Natarajan.
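One common way to get by with less labelled data is uncertainty sampling: only the examples the current model is least sure about are sent to human annotators. The Python sketch below assumes that strategy; the article does not say which active learning method the Alexa team used, and the function name, utterances and scores are invented for illustration.

```python
def select_for_labelling(predictions: dict[str, float], budget: int) -> list[str]:
    """Pick the utterances whose predicted probability is closest to 0.5.

    `predictions` maps each unlabelled utterance to a (hypothetical) model's
    probability that it belongs to some intent, e.g. "play music".
    """
    by_uncertainty = sorted(predictions, key=lambda utt: abs(predictions[utt] - 0.5))
    return by_uncertainty[:budget]


if __name__ == "__main__":
    # Made-up scores for illustration only.
    scores = {
        "gaana bajao": 0.97,
        "piku ke gaane": 0.52,
        "mausam batao": 0.05,
        "daria wala gaana": 0.41,
    }
    print(select_for_labelling(scores, budget=2))
```

Utterances the model already handles confidently are skipped, so the annotation budget goes to the cases most likely to teach it something new.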

There is also self-learning, where Alexa itself learns from users' behaviour whether the information it delivered was correct or not.

For example, when a child asks Alexa to play the ABC song and his parents, who are watching him, come to his aid by chipping in that he means the "alphabet song", the machine understands that both are similar.

The next time the child -- or anyone -- asks for the ABC song, it will rewrite the query as 'alphabet song' and deliver the right song.
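The rewriting step can be pictured as a lookup table that is filled in from such corrections. The Python sketch below is a simplified assumption of how that might work; the `QueryRewriter` class and its `min_count` threshold are hypothetical and not a description of Alexa's actual system.

```python
from collections import Counter, defaultdict


class QueryRewriter:
    """Learn query rewrites from observed (original, rephrase) pairs."""

    def __init__(self, min_count: int = 1) -> None:
        # original query -> how often each rephrase followed it
        self._rephrases: dict[str, Counter] = defaultdict(Counter)
        self._min_count = min_count

    def observe(self, original: str, rephrase: str) -> None:
        """Record that a request was followed by a corrected version."""
        self._rephrases[original.lower()][rephrase.lower()] += 1

    def rewrite(self, query: str) -> str:
        """Return the most frequent rephrase if seen often enough, else the query."""
        counts = self._rephrases.get(query.lower())
        if not counts:
            return query
        best, seen = counts.most_common(1)[0]
        return best if seen >= self._min_count else query


if __name__ == "__main__":
    rewriter = QueryRewriter()
    rewriter.observe("ABC song", "alphabet song")
    print(rewriter.rewrite("abc song"))
    print(rewriter.rewrite("Piku songs"))
```

Raising `min_count` would make the system wait for several users to make the same correction before it trusts the rewrite.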

According to Natarajan, the lessons learnt from launching Hindi will help the R&D team come up with innovations.

"We may have never developed a phonetic search just for English.

"Because it was not that big a problem.

"But now that it's available, it can help improve performance in other countries," he said.

© 2025
